Hans Moravec is a Principal Research Scientist at the Carnegie Mellon University Robotics Institute. Moravec, whose interest in robots extends back to his childhood, discusses his intriguing and personal views on robots, from the current state of technology, to today's bomb-defusing machines, to the capabilities of robots in the next century. NOVA spoke to Dr. Moravec in October 1997.

Hans Moravec is a Principal Research Scientist at the

Carnegie Mellon University Robotics Institute. Moravec,

whose interest in robots extends back to his childhood,

discusses his intriguing and personal views on

robots—from the current state of technology, to

today's bomb defusing machines, to the capabilities of

robots in the next century. NOVA spoke to Dr. Moravec in

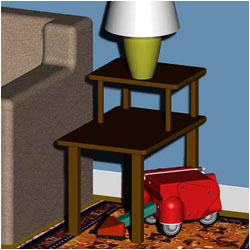

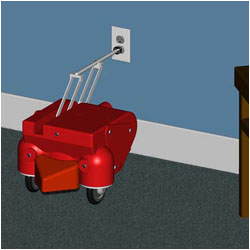

October, 1997.NOVA: Can you give me a good working definition of what a robot is and how it differs, say, from a machine tool or a computer? HM: Robots are machines that are able to do things that previously had been associated only with human beings or animals—such as the ability to understand their surroundings and plan their actions. NOVA: Most hazardous duty and bomb disposal robots seem to be tethered in some way to a human operator—either by an actual cable or by a radio signal. Do they qualify as robots in your mind? HM: Not really. We call remote control devices "robots" because I guess they have some of the characteristics of autonomous machines, at least physically. The research going towards those machines does contribute to the research towards autonomous machines, but they're lacking the brains. They have the bodies of robots, but not the brains. NOVA: I read an interview in which you said, "Today's best robots can think at insect level." HM: A few years ago that was correct. In fact, we're probably a little above insects now. I make a connection between nervous systems and computer power that involves the retina of the vertebrate eye. The human eye has four layers of cells which detect boundaries of light and dark and motion. The "results" are sent at the rate of ten results per second down each of those millions of fibers. It takes about a hundred computer instructions to do a single edge motion detection. So you've got a million times ten per second, times a hundred instructions; that's equivalent to a billion calculations per second in the retina. That is a thousand times as much computer power as we had for most of the history of robotics and artificial intelligence. So we were working with one-thousandth the power of the retina, or roughly what you might find in an insect. What happened by the end of the '80s is the cost of one MIPS [the standard by which computing power is measured] dropped down to $10,000 or below. 
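
The retina estimate above is a three-factor multiplication, and it can be checked in a few lines of Python. This is only a sketch of the arithmetic as quoted: the variable names are illustrative and do not come from the interview, and one million fibers is the figure used in the multiplication.

```python
# Figures as quoted in the interview; the names are illustrative assumptions.
fibers = 1_000_000               # "a million" fibers carrying results
results_per_second = 10          # ten results per second down each fiber
instructions_per_result = 100    # about a hundred instructions per detection

# Instructions per second needed to match the retina's output.
retina_ips = fibers * results_per_second * instructions_per_result

# MIPS means million instructions per second.
retina_mips = retina_ips / 1_000_000

# "One-thousandth the power of the retina": the budget early projects had.
early_research_mips = retina_mips / 1000

print(f"retina: {retina_ips:,} instructions/s = {retina_mips:,.0f} MIPS")
print(f"early robotics research: about {early_research_mips:,.0f} MIPS")
```

On these figures the retina comes out at 1,000 MIPS, the same "thousand MIPS" that the robot vacuum cleaner described later in the interview is given.
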
And at that point the power available to individual research projects started climbing. The climbing rate is pretty amazing because computers have been doubling in power. It was once about every 24 months. In the '80s it was once about every 18 months. Recently it's been closer to every 12 months. So we now have in our research projects machines that can do 300 MIPS, and soon we're going to have a thousand. So we're at the stage of small vertebrates. Over the next few decades, the power is going to take us through small mammals and large mammals. I have a detailed scenario that suggests that we get to human level, not just in processing power but in the techniques, by the middle of the next century.

NOVA: Can you walk us through what you think robot evolution will look like?

HM: Well, I imagine four stages. I think we're just on the verge of being able to see machines that work well enough that they'll become the predecessors to the first generation of mass-produced robots that are not toys. I have a particular one in mind: a small machine that could be a robot vacuum cleaner which, with a thousand MIPS of computing, is able to maintain a very dense three-dimensional map or image of its surroundings. It will be able to both plan its actions and to navigate, so that it knows at every moment where it is and is even able to identify major pieces of furniture and important items around it. So, a small machine, small enough to get under things and to find its own recharging station and to empty out its accumulated dust from time to time. That's the research we're doing, and I think sometime within the next five to ten years we'll have something like that, and its successors will become a little more capable. They'll have a few more devices and be programmable for a broader range of jobs until, eventually, you get a first generation universal robot, which has mobility and the ability to understand and manipulate what's going on around it.

NOVA: What do you mean by universal robot?

HM: It's a machine which can be programmed to do many different jobs. It's analogous to a computer, which is a universal information processor, except that its abilities extend to the physical world.

NOVA: Okay, so we've got the first generation of universal robot.

HM: Right, the time schedule is around 2010 now. Every single job a robot needs to know has to be built into the application program, and when you run the program, the robot acts in a pretty inflexible way. Still, its perceptual and motor intelligence is comparable to maybe a small lizard.

NOVA: What types of tasks might we expect these robots to do?

HM: Well, things like floor cleaning and perhaps other kinds of dusting, delivery. The kinds of factory tasks that robots are now doing should be possible for universal robots, like the assembly of things. But because this kind of robot should be mass-produced, the range of tasks will probably be larger than anything that exists today, and the machines will be cheap enough to be used in places that you can't use robots today. I imagine car cleaning tasks and bathroom cleaning and lots of other things that will depend on the ingenuity of the programmers.

NOVA: What happens next?

HM: All right, so now we come to a second generation. The second generation machines will have a computer that is maybe 50 times as powerful as the first generation and is able to host programs that are written with alternatives. For example, picking up an object, which might be part of some big job, could be done with one hand or with another hand of the robot. Each of the alternatives has associated with it a number, which is the desirability of doing the step that way, as opposed to doing it an alternative way. Those desirability numbers are adjusted based on the robot's experience.
And the robot's experience is defined by a set of independent programs that are called conditioning modules, which detect whether good things or bad things happen. For instance, you might have one module that responds to collisions that the robot undergoes, and produces a signal that says, "Something bad happened." Another one detects if the task the robot was doing was finished, or finished particularly quickly, and signals that something good happened. If the batteries are discharged, that's bad. Perhaps if they're kept in a good state, that's good. Gradually the robot adapts, because of this internal conditioning, to do things in ways that work out particularly well and avoid ways that have caused trouble in the past.

NOVA: So it's capable of rudimentary learning.

HM: Yeah, it's conditioning. And, you can even imagine these conditioning modules being tied to external advice. For instance, one module might respond to your saying, "good." And another one to your saying, "bad." And so you can direct the robot to act a certain way, as opposed to another. You could, if you wanted to, train it in the way that you might train a dog, by repeatedly saying "good." It's Skinnerian training basically.

NOVA: What sorts of tasks would the second generation robots be able to do, that the first generation ones wouldn't be able to do?

HM: They'll be used presumably in the same kinds of situations but they'll be much more reliable there. They'll be much more flexible. Take a first generation robot that's putting away the dishes. Maybe it's programmed to always grab certain objects in a certain way. And it has motion planning and collision avoidance. But still, some things may have been overlooked in that program. And perhaps in your particular circumstance, whenever it goes into a certain cabinet, it ends up always catching its elbow on the door. The first generation robot will never learn. It will just keep making that same mistake over and over and over again.
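
The conditioning scheme described above (alternatives that each carry a desirability number, adjusted by good and bad signals from independent modules) can be sketched in Python. This is a minimal illustration under stated assumptions, not Moravec's design: the `Step` class, the multiplicative update rule, the clamping bounds, the learning rate, and the toy cabinet scenario in which the left arm always catches on the door are all hypothetical.

```python
import random


class Step:
    """One step of a job, with alternative ways of doing it (hypothetical)."""

    def __init__(self, alternatives):
        # Every alternative starts out equally desirable.
        self.desirability = {name: 1.0 for name in alternatives}

    def choose(self, rng):
        # Pick an alternative with probability proportional to its desirability.
        names = list(self.desirability)
        weights = [self.desirability[n] for n in names]
        return rng.choices(names, weights=weights, k=1)[0]

    def condition(self, name, signal, rate=0.2):
        # Assumed update rule: scale the number up on good signals, down on bad,
        # and clamp it so an alternative is never ruled out entirely.
        updated = self.desirability[name] * (1.0 + rate * signal)
        self.desirability[name] = min(100.0, max(0.05, updated))


def collision_module(outcome):
    """Conditioning module: signals 'something bad happened' on a collision."""
    return -1.0 if outcome["collided"] else 0.0


def completion_module(outcome):
    """Conditioning module: signals 'something good happened' on completion."""
    return 1.0 if outcome["finished"] else 0.0


def simulate(seed=0, trials=200):
    rng = random.Random(seed)
    step = Step(["left_arm", "right_arm"])
    for _ in range(trials):
        arm = step.choose(rng)
        # Toy environment (assumption): the left arm always catches the door.
        outcome = {"collided": arm == "left_arm", "finished": arm == "right_arm"}
        signal = collision_module(outcome) + completion_module(outcome)
        step.condition(arm, signal)
    return step.desirability


if __name__ == "__main__":
    print(simulate())
```

Because the choice is weighted rather than fixed, the robot in this sketch still occasionally tries the poorer alternative, which is one simple way to let it notice if circumstances change.
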
The second generation robot will gradually learn to do things a different way, maybe use a different arm or reach in a different manner. Essentially the robot will tune itself. And it will be much more pleasant to have around, because it won't be making the many little mistakes that the first generation robot will.

NOVA: What year is it now?

HM: Approximately 2020. Each one of these generations is about a decade. The first generation robot may be comparable to a small lizard. The second generation robot, with its limited trainability, may be something comparable to a small mammal. The third generation robot is the first really interesting one. It's predicated on there already being quite a large industry, based on these earlier generations, which is able to support the development of a major module for these machines: the ability to model the world, a "world simulator." This simulator allows the third generation robot to make many mistakes in its mind, running through scenarios in simulation rather than physically. The second generation robot learns, but quite slowly. It has to make a mistake many times before it learns to avoid it. And when it's tuning up, when it's getting good at something, it has to do it many times before it really, really gets good at it. The third generation robot runs through the task many times, mentally, and tunes it up there. So when it first goes to do something physically, it has a good chance of doing it right.

NOVA: It thinks before it acts.

HM: Right. And the simulator is a big deal, because it can't be strict physical simulation. That's still computationally out of reach, even with the kind of computer power I imagine for then.
What it needs is something that's closer to folk physics: basically rules of thumb for every different kind of object that it's likely to encounter, because one of the things the robot will have to do is roll into a new room and make an inventory of everything that it sees around it, so that it can build a pretty accurate simulation of that room, so it can then do its mental rehearsals. It will have to identify the objects that it sees and then call up generic descriptions of what those objects are and how they behave when they're interacted with, and how to use them. Building this generic database is a major effort.

NOVA: What sorts of things would it need to know about, say, cutlery?

HM: Well, first of all, where cutlery would probably be located, how to pick up the individual pieces, roughly how heavy to expect them to be, you know, how hard they can be gripped. Because, for instance, if it sees an egg and wants to pick it up, it has to know to be gentle with it. If it sees a knife, it has to know in advance to pick it up a little harder because, if it picks it up too lightly, the weight will cause it to fall down. And, of course, it can learn by making actual mistakes, but the whole idea of the simulation is to avoid those mistakes in the first place, whenever possible. Now, what's really interesting about the third generation robot is that, besides having this physical model for things in the world, it will also have to have a psychological model for actors in the world, particularly human beings. It should know that poking a sharp stick at a human being will produce a change in state of the human being. They will probably become angry, and if they're angry, they're likely to do certain things which will probably interfere with the robot's tasks.
And, since these robots will probably be used, among other things, as servants working among people, it will be useful for the robot to have an idea of whether its owners are happy or unhappy and choose actions that improve the happiness. Basically, machines that make their owners happier are likely to sell better, so ultimately there'll be market pressure. Third generation robots should be able to deduce something about the internal state of the human beings around them: if a person seems to be in a hurry or if this person seems to be tired. You can probably deduce that from a modest observation of body language.

NOVA: They're doing some mood recognition already at the M.I.T. Media Lab, aren't they?

HM: Yeah, that's right. Another interesting thing that a third generation robot is able to do is to provide a description of things. You should be able, with a small additional amount of programming, to generate some kind of a narrative. You may ask, "Why did you avoid going into that room?" and be told, "Because Bob's in there and I know he's upset and my moving around him will probably irritate him further." Now, here's a funny twist. You can have conversations with the third generation robot where it seems to believe that it's conscious; it talks about its own internal mental life in the same ways that people do. And so, I think for practical purposes, it is. So the third generation robot can analyze. It's comparable to maybe a monkey. When it simulates the world, it's always in terms of particular objects or particular sizes and particular locations. It doesn't really have any ability to generalize. Its ability to understand the world is very literal.

NOVA: It sounds sort of sweet.

HM: It is. That's right. You wouldn't expect any deviousness at all.

NOVA: Take us to the fourth generation robot.

HM: All right.
Basically, if the third generation robot is something like a monkey, the fourth generation robot becomes something like a human being, actually more powerful in some ways. The fourth generation robot basically marries the third generation robot's ability to simulate the world with an extremely powerful reasoning program. Even today, we have reasoning programs that are superior to human beings in various areas. Deep Blue plays chess better than just about everybody, and various expert systems can do their chains of deductions better than just about anybody. And of course, for a long time, computers have been able to do arithmetic better than everybody, for sure. But there is a certain limitation that these programs embodying intelligence have had, which is they really haven't been able to interact with the physical world. When a medical diagnosis program talks about the symptoms of a patient, it's only processing words. And when it comes up with a recommendation, again, it's more words.

NOVA: But now the reasoning will be connected to physical experience or understanding.

HM: Right. The reasoning that the fourth generation does is greatly enhanced by the third generation robot's ability to model the world. So physical situations that the robot thinks about in its simulation can now be abstracted into statements about the world. And then inferences can be drawn from the statements, so that the robot can come to non-obvious conclusions. For example, it might be able to figure out from running several examples that if it takes any container of liquid without a lid and turns it upside down, the liquid will spill out. The third generation robot would need to figure out not to turn this glass over, not to turn this jar over, not to turn this pitcher over, etc., whereas the fourth generation robot would be able to infer it. That's just an example.
Fourth generation robots would be able to do much more complicated tasks, and do them probably better than humans, because really deep reasoning involves long deductive chains and keeping track of a lot of details. Human memory is not that powerful.

NOVA: Can you envision, in the future, a robot being better than a human at finding and disarming a bomb?

HM: Sure. You can imagine that for the near future. The sensors that a robot can bring can be tuned for the task. For example, radar can penetrate various kinds of materials; depending on the frequency you use, you can see through walls. So, simply the ability to see into a package would certainly make a robot a better bomb detector.

NOVA: Can you envision a robot understanding the psychology of a terrorist better than a human being?

HM: Well, ultimately. Now we're talking 40 or 50 years from now, when we have these fourth generation machines and their successors, which I think ultimately will be better than human beings in every possible way. But the two abilities that are probably the hardest for robots to match, because they're the things that we do the best, that have been life or death matters for us for most of our evolution, are, one, interacting with the physical world. You know, we've had to find our food and avoid our predators and deal with things on a moment-to-moment basis. So manipulation, perception, mobility: that's one area. And the other area is social interaction. Because we've lived in tribes forever and we've had to be able to judge the intent and probable behavior of the other members of our tribe to get along. So the kind of social intuition we have is very powerful and probably uses close to the full processing power of our brain (the equivalent of a hundred trillion calculations per second) plus a lot of very special knowledge, some of which is hard-wired, some of which we learned growing up. This is probably where robots catch up last. But, once they do catch up, then they keep on going.
I think there will come a time when robots will understand us better than we understand ourselves, or understand each other. And you can even imagine the time in the more distant future when robots will be able to host a very detailed simulation of what's going on in our brains and be able to manipulate us.

NOVA: Wow.

HM: I see these robots as essentially our offspring, by unconventional means. Ultimately, I think they're on their own and they'll do things that we can't imagine or understand, you know, just the way children do.

Photos: (1) Gene Puskar; (2-3) Jesse Easudes.

NOVA Online | WGBH © | Updated November 2000